Personnes présentes

- Idir Ait Sadoune

- Véronique Benzaquen

- Frédéric Boulanger

- Évelyne Contejean

- Quentin Garchery

- Chantal Keller

- Hai Nguyen Van

- Safouan Taha

- Burkhart Wolff

- Lina Ye

Handling Ontologies within Isabelle/PIDE

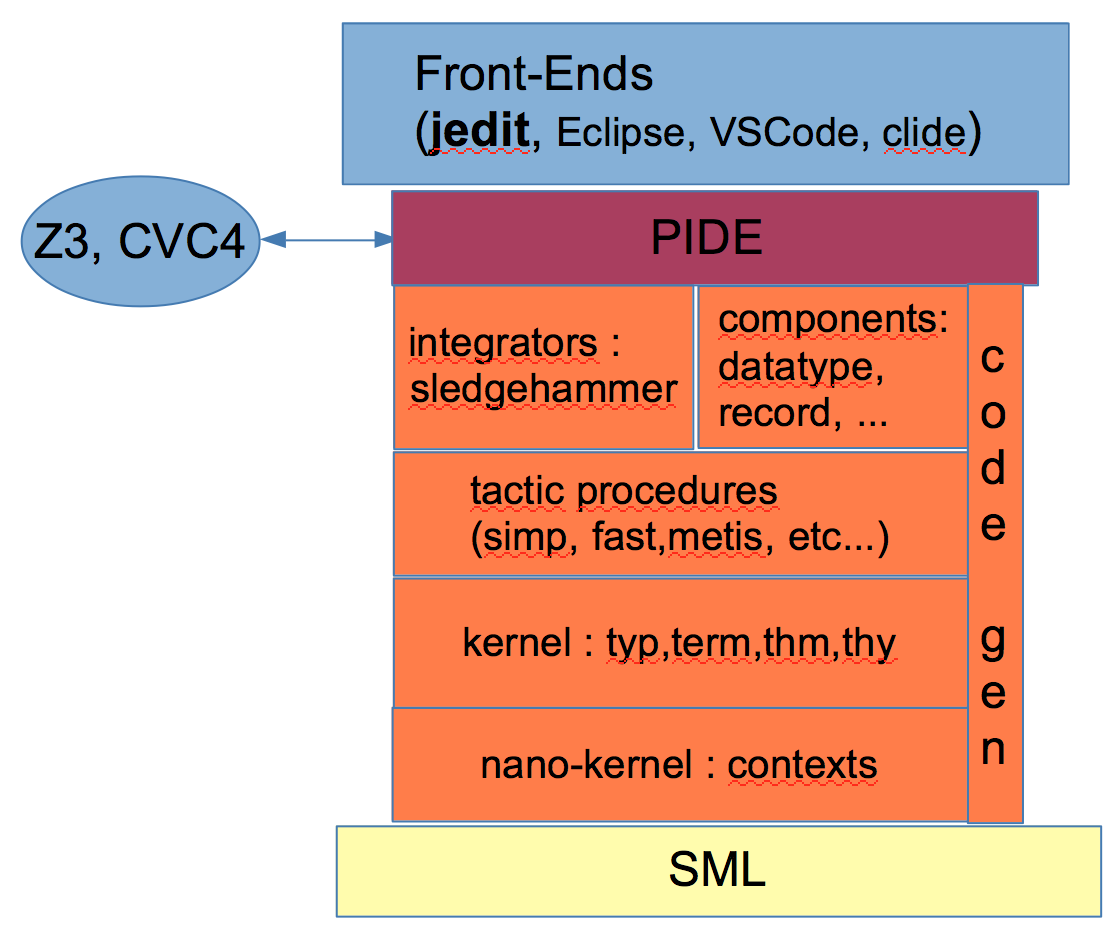

Recall: Aspects of the Isabelle Architecture

Burkhart recalls the architecture of Isabelle/PIDE. Isabelle is a logical framework with many layers, ML at the bottom layer as the implementation language.

The architecture actually foresees a "Nano-Kernel" (terminology bu) which resides in the SML structure Context.ML; it provides a kind of container with the features:

- contexts can "import" others in an acyclic way (DAG structure)

- there is an API allowing to register user-defined typed memory space in contexts

- there is an API allowing to register user-defined transactions on contexts

This general mechanism allows the Isabelle/Isar framework to be both highly concurrent (as long as the user-defined transition actions are purely functional on registered state) and yet provide a global undo/reset/re-evaluate mechanism necessary in an *interactive* architecture allowing a user to provide a stream of increments on the system input.

In this nano-kernel layer, the classical LCF kernel registered, over which tactics were programmed, as well as more sophisticated proof engines. On the top the we have a the Prover-IDE (PIDE) layer, which is nowadays a quite substantial system component of several 10 kloc's of size in Scala and ML. For PIDE, several editor plugins have been developed, where jEdit is the most commonly known (more experimental alternatives are Eclipse, Visual Studio Code and clide, a pure web-based interface).

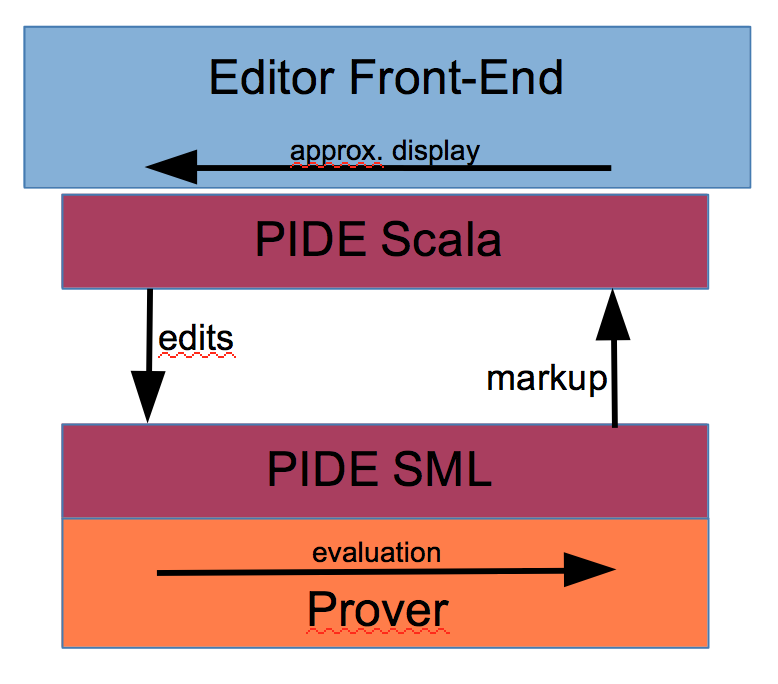

The low-levelnano-kernel allows a fundamentally asynchronous interaction with the user:

... where editing increments of the user where send via pipe communication from the scala-PIDE part (hooked into the plugin of the editor) to the SML part (hooked to the prover). They trigger a multitude of (in large parts concurrent) computations in the prover to re-evaluate the modified document in an adapted way, resulting in a stream of markup containing representation information. This captures colorings (for bound variables or constants), hovering-information (for types of sub-expressions) and linking information (permitting a hypertext-like navigation from application occurrences to defining occurrences) in the global document consisting of a multitude of files.

The interface filters the incoming stream of markup with respect to freshness and relevance for the user.

Writing user-defined Plugins in Isabelle

Write an own plugin in Isabelle starts with defining the local data and registering it in the framework. As mentioned before, contexts are structures with independent cells/compartments having three primitives init, extend and merge. Technically this is done by instantiating a functor Generic_Data:

structure Data = Generic_Data ( type T = docobj_tab * docclass_tab val empty = (initial_docobj_tab, initial_docclass_tab) val extend = I fun merge((d1,c1),(d2,c2)) = (merge_docobj_tab (d1, d2), merge_docclass_tab(c1,c2)) );

with some tables docobj_tab and docclass_tab capturing the specific data of the application in mind, i.e. a table of document classes and another one cpaturing the document class instances.

Note that all the text samples here have to be in the context of an SML file or in an

ML{* ... *}

command inside a .thy file.

Operations are organized in a model-view-controller scheme, where Isabelle/Isar provides the controller part. A typical model operation has the type:

val opr :: <args_type> -> Context.generic -> Context.generic

i.e. it represents a transformation on contexts. For example, the operation of declaring a local reference in the context is presented as follows:

fun declare_object_local oid ctxt = (map_data (apfst(fn {tab=t,maxano=x} =>

{tab=Symtab.update_new(oid,NONE) t,

maxano=x}))

(ctxt)

handle Symtab.DUP _ =>

error("multiple declaration of document reference"))

where exceptions where handled and translated into system-global errors here, and a number of combinators like map_data as well as operations from a library structure Symtab were used to update the appropriate table in the plugin-local state.

Finally, the view-aspects where handled by an API for parsing-combinators. The library structure Scan provides, for example, the operators:

op || : ('a -> 'b) * ('a -> 'b) -> 'a -> 'b;

op -- : ('a -> 'b * 'c) * ('c -> 'd * 'e) -> 'a -> ('b * 'd) * 'e;

op |-- : ('a -> 'b * 'c) * ('c -> 'd * 'e) -> 'a -> 'd * 'e;

op --| : ('a -> 'b * 'c) * ('c -> 'd * 'e) -> 'a -> 'b * 'e;

op >> : ('a -> 'b * 'c) * ('b -> 'd) -> 'a -> 'd * 'c;

op option: ('a -> 'b * 'a) -> 'a -> 'b option * 'a

op repeat: ('a -> 'b * 'a) -> 'a -> 'b list * 'a

for alternative, sequence, sequence-ignore-left, sequence-ignore-right, and piping, as well as combinators for option and repeat. The parsing combinator technology arrived meanwhile even in mainstream languages such as Java or Scala and is nowadays sufficiently efficient implemented to replace conventional Lex-Yacc technology for most applications. It has the advantage to be smoothlessly integrated in standard programs and allows for dynamic grammar extensions. There is a more high-level structure Parse providing specific combinators for the command-language Isar:

val attribute =

Parse.position Parse.name

-- Scan.optional (Parse.$$$ "=" |-- Parse.!!! Parse.name) "";

val reference =

Parse.position Parse.name

-- Scan.option (Parse.$$$ "::" |-- Parse.!!! (Parse.position Parse.name));

val attributes = (Parse.$$$ "[" |-- (reference --

(Scan.optional(Parse.$$$ "," |-- (Parse.enum "," attribute))) []))

--| Parse.$$$ "]"

"Model" and "View" elements were combined to parsers which were registered in Isabelle/Isar framework:

ML{*

val _ =

Outer_Syntax.command @{command_keyword "declare_reference"} "declare document reference"

(attributes >> (fn (((oid,pos),cid),doc_attrs) =>

(Toplevel.theory (DOF_core.declare_object_global oid))));

*}

Altogether, this gives the extension of the Isar syntax allowing to parse and interpret the new command in a subsequent .thy file''':

declare_reference [lal::requirement, alpha="main", beta=42]

where we ignore the semantics at the moment. The construction also generates under-the-hood some markup information; for example, when hovering over the declare_reference in a Front-End supporting PIDE, a popup window with the text: "declare document reference" will appear.

From Literate Specifications to Formal Content via Antiquotations

Isabelle/Isar is configured with a number of standard commands to annotate formal definitions and proofs with text --- the latter is used in particular to generate .pdf and .html documents with internal hypertext-references.

section{* The is an example section *}

text{* Lorem ipsum dolor sit amet, aenean nam vel nulla suspendisse, per arcu mauris,

pellentesque eros urna sapien vel...

*}

will be converted by the Isabelle document generation process via an equivalent typesetting commands

in LaTeX into the usual .pdf format widely used in scientific typesetting. Standard references to

sections, figures, definitions can be added by inserting the usual LaTeX-commands and packages,

for example \autoref{sec:intro} stemming from the hyperef package. However, erroneous links

can only be detected after the validation phase of the Isabelle document and only on the generated

LaTeX source, which makes the debugging process long and tedious for non-trivial documents.

Inside text commands (i.e. inside a 'text context'), Isabelle/Isar offers a particular mechanism to allow for the smooth integration of formal content into text, i.e. text entities with a formal structure or links to entities with formal structure. For example,

text{* In the reflexivity axiom of HOL, which has the form @{thm refl}, ... *}

will display the text

'In the reflexivity axiom of HOL, which has the form x = x, ...'

so the reference to the reflexivity theorem has been replaced by a presentation of the

'content' of the reference refl. The coherence between the reference refl and its

use in the text-command is checked at editing time, enforcing that refl is indeed a legal

name for az theorem with a particular presentation. Since these checks are part of the global

validation of Isabelle of a document (misspellings are treated actually as well as errors as errors in proofs),

turning informal text information into formal content

via antiquotations makes the document more robust under change in the (potentially distributed)

evolution of the document.

Recent versions of Isabelle/HOL come with a number of antiquotations with a fairly rich language of options such as:

text{* The axiom of name @{thm [source] refl} has the form: @{thm refl}

@{thm[mode=Rule] conjI}

@{file "../../Isa_DOF.thy"}

@{value "3+4::int"}

@{const hd} @{theory List}}

@{term "3"} @{type bool}

@{term [show_types] "f x = a + x"} *}

These antiquotation enforce checks that a file actually exists, will display the result of the computation 7 check the validatity of type-expressions inside a text, and so forth.

More details on the fairly rich text antiquotation language can be found in the accompanying documentation sugar.pdf and, in particular, isar-ref.pdf section 4.2.2. pp 79 (Version Isabelle 16-1).

Design Principles for the Isabelle Ontology Framework Isa_DOF

We made a number of design-decisions for our implementation:

- the entire Isa_DOF is conceived as Pure-Plugin, i.e we deliberately resign on the possibility to modify Isabelle itself (thus accepting a minor loss in performance and some additional complexity in the documentation generation process)

- we decided to identify the ontology types with the Isabelle/HOL type system, i.e. we reuse the same infrastructure for parsers and type-checkers, but use the types on the meta-level of the document structure

- we decided to make the markup-generation by own means in order to adapt it as best as possible to the needs of tracking the linking in documents.

Document Ontology Modeling with Isa_DOF

Burkhart introduces an own Isar-supported language to define ontologies. Ontologies consist of:

- a document class that describes a concept;

- attibutes specific to document classes;

- attributes should be typed (arbitrary HOL-types);

- attributes can refer to other document classes, thus, document classes must also be HOL-types (Such attributes were called links);

- a special link, the reference to a super-class, establishes an is_a relation between classes;

- classes may refer to other classes via a regular expression in a where clause (classes with such an optional where clauses are called 'monitor classes);

- attributes may have default values.

A document class represents set of instances of a document class, i.e. references to document elements.

For ontology modeling, we chose a syntax roughly similar to Isabelle/HOL's extensible records. We present the syntax implicitely by a little example, the document ontology for a scholar paper:

doc_class author =

affiliation :: "string"

doc_class abstract =

keyword_list :: "string list" -- None

doc_class text_section =

main_author :: "author option" -- None

doc_class introduction = text_section +

comment :: string

doc_class technical = text_section +

definition_list :: "string list" -- "[]"

doc_class example = text_section +

comment :: string

doc_class conclusion = text_section +

main_author :: "author option" -- None

doc_class related_work = conclusion +

main_author :: "author option" -- None

doc_class bibliography =

style :: "string option" -- "''LNCS''"

doc_class article =

trace :: "(title + subtitle + author+ abstract +

introduction + technical + example +

conclusion + bibliography) list"

where "(title .

[subtitle] .

(author)+ .

abstract .

introduction .

(technical | example)+ .

conclusion .

bibliography)"

Components of Isa_DOF so far

Burkhart implements in the Isabelle/Isar framework (shallow, if one wants, but not in the sense of a shallow embedding in HOL)

- syntax and semantics for

doc_classparser parsing Isabelle commands as the ones above - syntax and semantics of a new family of text-commands adding syntax to declare meta-information of a text element

- a generator of antiquotations implemented inside the

doc_classparser.

The doc_class parser uses internally the standard Isabelle record-package but restricts it to

ground types (no parametric doc_classes so far) and adds own information for our application, which is:

- structural information,

- the key's for generated markup,

- the defaults,

- the regular expression syntax for monitor classes,

- etc.

The meta-information porting text-commands are distinguished by an additional *-postfix of the

command names:

chapter*[<meta-args>]{* <text> *}

section*[<meta-args>]{* <text> *}

subsection*[<meta-args>]{* <text> *}

text*[<meta-args>]{* <text> *}

which allows, for example, to specify

text*[intro:introduction, main_autor="bu"]{* This is going to be revolutionary. *}

which generates antiquotations as used as follows:

text{* As already mentioned in the @{introduction <intro>}, this is fantastic. *}

Note that both the textual representation of the antiquotation is checked formal content, as well as the linking and the ontological consistency:

@{introduction <example1>}

Even assuming that example1 is a declared reference to a text element belonging to the class example, the above antiquotation is still refused as ontologically inconsistent.